This Industry Viewpoint was authored by Alex Vayl, VP Business Development for NSONE

Website and application delivery has gone through dramatic shifts over the past 25 years. One of the more recent catalysts is a trend towards distributed application deployments, enabled by the advent of IaaS and infrastructure automation, which have dramatically reduced the costs and complexity of deploying applications in multiple markets.

Distributed deployment of an application can help minimize downtime and improve performance, and is more accessible now than ever before. Users can deploy servers in different parts of the world in minutes and leverage a multitude of software frameworks, databases, and automation tools designed for use in decentralized environments.

The Disconnect

While the last half decade has brought significant progress toward distributed applications in both infrastructure and the application stack, the tools for effectively routing traffic to a distributed application have stagnated over the same period.

Today, traffic management is often accomplished through prohibitively expensive networking techniques like BGP anycasting, capex-heavy hardware appliances with global load balancing modules, or managed DNS platforms with extremely limited traffic management functionality.

So how do we solve this disparity between building a distributed application and easily controlling how your traffic gets directed to that application?

As it turns out, DNS is an excellent step in the application delivery process to enable powerful, granular traffic management. We have simply been limited by the capabilities of the services on the market, because they were never designed with today’s applications in mind.

Genesis

In the 1990s, DNS had already long been one of the core protocols of the Internet. Its role was simple yet critical — translate human-readable domain names to server IP addresses or other lower level service information.

The Internet was still in its infancy. Websites were hosted on a single server, in a single location, and mostly consisted of static content.

Because of relatively slow ISP connection speeds, performance constraints revolved around bandwidth limitations and server specifications. There was little need for complex or dynamic traffic routing to websites or web applications, because most websites were hosted out of a single datacenter and needed to be fairly lightweight.

The Distributed Audience

After the dot-com bubble, high-speed Internet connections became the norm, and we quickly saw the emergence of a more dynamic “Web 2.0”.

Bandwidth and server performance were gradually becoming less of a problem. Fatter pipes, more RAM and faster CPUs addressed these bottlenecks. Moore’s and Nielsen’s Laws further predicted these concerns would become negligible over time.

However, as websites and applications shifted their designs to be more responsive and interactive, and as audiences became increasingly international, we started encountering a new barrier — latency.

High latency can cause applications to perform poorly and websites to load slowly, even if a user has a high-speed Internet connection. Unlike bandwidth, latency has a hard floor set by the speed of light: no amount of extra capacity makes a signal cross the planet faster. So if a user in Hong Kong is accessing your application delivered from a datacenter in New York, that propagation delay is multiplied by every protocol round trip, resulting in a poor user experience even if the user has world-class connectivity from their ISP.
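To put rough numbers on the Hong Kong to New York example, here is a back-of-the-envelope sketch. The coordinates are approximate city centers, the fiber speed of about 200,000 km/s (roughly two thirds of the speed of light in a vacuum) is a common rule of thumb, and the count of four sequential round trips is purely an illustrative assumption. Real network paths are longer than the great-circle distance, so actual latency will be higher than this floor.

```python
import math

EARTH_RADIUS_KM = 6371.0       # mean Earth radius
FIBER_SPEED_KM_S = 200_000.0   # assumption: light in fiber travels at about 2/3 of c


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))


def min_rtt_ms(distance_km, speed_km_s=FIBER_SPEED_KM_S):
    """Lower bound on one round trip: there and back at the given signal speed."""
    return 2 * distance_km / speed_km_s * 1000


# Approximate city-center coordinates (illustrative values).
HONG_KONG = (22.32, 114.17)
NEW_YORK = (40.71, -74.01)

distance = great_circle_km(*HONG_KONG, *NEW_YORK)
rtt = min_rtt_ms(distance)
sequential_round_trips = 4  # assumption: e.g. connection setup plus request/response
floor_ms = sequential_round_trips * rtt

print(f"distance ~{distance:.0f} km, min RTT ~{rtt:.0f} ms, "
      f"floor for {sequential_round_trips} round trips ~{floor_ms:.0f} ms")
```

Under these assumptions the distance comes out at roughly 13,000 km, which puts the floor at about 130 ms per round trip and more than half a second for four sequential round trips, before any server processing time or congestion is counted.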

The Distributed Application

The next logical step in cheating latency brings us to the present day.

Major operators of internet infrastructure have long sought to beat the speed of light by moving applications closer to users. For example, CDN and DNS operators have worked to shave precious milliseconds from requests by placing content nodes or nameservers in several locations, leveraging complex, expensive technologies like IP anycasting to direct requests to “nearby” service endpoints. But these techniques have limitations for general purpose applications, and they’re incredibly expensive and complex to operate and maintain.

Since the early 2000s, applications leveraging a CDN have been able to beat the speed of light for certain kinds of content, usually static: the CDN operates complex and expensive distributed infrastructure to push bits to global users more effectively. But CDNs have never been good at delivering dynamic content.

To truly push dynamic applications to the edges of the internet and minimize the impact of latency on user experience, developers have more and more frequently deployed their own “delivery networks” similar to CDNs. Now, the application itself is distributed across several markets. Over the last half decade, database technologies, increased bandwidth, IaaS offerings, automation tools, and application frameworks have evolved to enable this kind of distributed application, and more and more applications are built from the very beginning to be distributed.

What hasn’t kept pace are the tools for getting traffic to these distributed application endpoints. Still, developers are stuck with the expensive approaches used by CDN, DNS, and other big infrastructure operators — or with simplistic techniques like “geographic routing” which attempt to route users based on metrics (like distance) that are only loosely related to performance on the internet. While the DNS protocol is a great tool for traffic management (DNS lookup is the first and best opportunity to make an application endpoint selection), DNS technology has not evolved at the pace of applications themselves.

Enter “DNS 2.0”

To help bring traffic management tools to parity with today’s application deployment and delivery paradigms, the new generation of modern DNS providers will enable developers to take full control over how users are serviced by a distributed application.

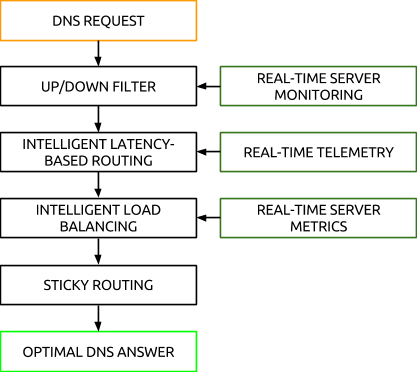

Traffic management tools will be delivered in an RFC-compliant DNS stack, enabling developers to take granular, real-time control over their traffic without any complex hardware, expensive networking setup, or specialized expertise. This technology will be designed with truly modern applications in mind. It will act on telemetry to shift traffic between application endpoints in response to conditions in the application infrastructure and on the internet at large, optimizing performance according to an application’s specific requirements.

This modern approach to DNS and traffic management is possible because of two powerful new ideas:

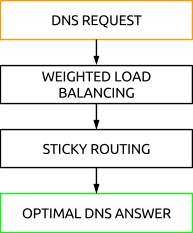

First, the ability to compose complex traffic routing algorithms from simple building blocks, combining a variety of techniques — ranging from simple geographic routing, to load shedding, to eyeball telemetry routing — to shift traffic between application endpoints in approaches perfectly tailored for the application.
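As a minimal sketch of what "composing from building blocks" could look like, the example below chains small filter functions, each of which narrows a list of candidate endpoints. Everything here is hypothetical and for illustration only: the `Endpoint` fields, the filter names (`only_up`, `shed_load`, `prefer_region`), and the `compose` helper are assumptions, not the API of any particular DNS provider.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass(frozen=True)
class Endpoint:
    name: str
    region: str   # hypothetical region label, e.g. "us-east"
    up: bool      # result of a health check
    load: float   # utilization between 0.0 and 1.0


# A filter takes the current candidates plus the client's region
# and returns a (possibly smaller) list of candidates.
Filter = Callable[[List[Endpoint], str], List[Endpoint]]


def only_up(endpoints: List[Endpoint], client_region: str) -> List[Endpoint]:
    """Drop endpoints that are failing health checks."""
    return [e for e in endpoints if e.up]


def shed_load(max_load: float) -> Filter:
    """Build a filter that drops endpoints at or above max_load."""
    def _filter(endpoints: List[Endpoint], client_region: str) -> List[Endpoint]:
        kept = [e for e in endpoints if e.load < max_load]
        return kept or endpoints  # never shed every remaining endpoint
    return _filter


def prefer_region(endpoints: List[Endpoint], client_region: str) -> List[Endpoint]:
    """Simple geographic routing: keep same-region endpoints if any exist."""
    local = [e for e in endpoints if e.region == client_region]
    return local or endpoints


def compose(*filters: Filter) -> Filter:
    """Chain filters so each one sees the output of the previous one."""
    def route(endpoints: List[Endpoint], client_region: str) -> List[Endpoint]:
        candidates = list(endpoints)
        for f in filters:
            candidates = f(candidates, client_region)
        return candidates
    return route


endpoints = [
    Endpoint("nyc", "us-east", up=True, load=0.95),
    Endpoint("sfo", "us-west", up=True, load=0.40),
    Endpoint("hkg", "asia", up=False, load=0.10),
    Endpoint("sin", "asia", up=True, load=0.30),
]

route = compose(only_up, shed_load(0.9), prefer_region)
us_east_answer = [e.name for e in route(endpoints, "us-east")]
asia_answer = [e.name for e in route(endpoints, "asia")]
print(us_east_answer, asia_answer)
```

In this sketch the order of the filters is the routing policy: health is checked first, overloaded endpoints are shed next, and geography is applied only to what remains. Reordering or swapping filters changes the behavior without touching the individual building blocks.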

Next, we can feed the above-mentioned traffic routing algorithms with real-time telemetry about the application infrastructure or the internet at large, using internal and/or external monitoring tools. Now, DNS-based traffic management acts on far more than just static geographic mappings; it can automatically adjust traffic based on live metrics like network latency, server load, application response times, or even IaaS spending commits.
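A rough sketch of telemetry-driven selection follows. Monitoring tools push latency and load samples into a small in-memory store, and the routing decision reads the latest values instead of a static map. The `TelemetryStore` class, its method names, the smoothing factor, and the load threshold are all hypothetical choices made for illustration.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class TelemetryStore:
    """Holds the latest metrics reported by (hypothetical) monitoring tools."""
    alpha: float = 0.3  # assumption: smoothing factor for the moving average
    latency_ms: Dict[str, float] = field(default_factory=dict)
    load: Dict[str, float] = field(default_factory=dict)

    def report_latency(self, endpoint: str, sample_ms: float) -> None:
        # Exponentially weighted moving average, so one outlier
        # does not flip the routing decision on its own.
        prev = self.latency_ms.get(endpoint)
        if prev is None:
            self.latency_ms[endpoint] = sample_ms
        else:
            self.latency_ms[endpoint] = self.alpha * sample_ms + (1 - self.alpha) * prev

    def report_load(self, endpoint: str, utilization: float) -> None:
        self.load[endpoint] = utilization

    def best_endpoint(self, max_load: float = 0.9) -> Optional[str]:
        """Lowest smoothed latency among endpoints below the load threshold."""
        eligible = [
            name for name in self.latency_ms
            if self.load.get(name, 0.0) < max_load
        ]
        if not eligible:
            return None
        return min(eligible, key=self.latency_ms.__getitem__)


store = TelemetryStore()
store.report_latency("nyc", 20.0)
store.report_latency("hkg", 180.0)
store.report_load("nyc", 0.5)
store.report_load("hkg", 0.2)
first_choice = store.best_endpoint()   # nyc is fastest and has headroom

store.report_load("nyc", 0.97)         # nyc becomes overloaded
second_choice = store.best_endpoint()  # traffic shifts to hkg

print(first_choice, second_choice)
```

The same pattern extends to the other metrics mentioned above, such as application response times or spending commits: each becomes another reported value that the selection step can take into account.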

Modern DNS services are bringing dynamic, intelligent technology to application delivery. This technology dramatically lowers the barrier for developers to deploy modern, high performance applications — whether they’re building the next big thing, or already have.

NSONE is a next generation DNS and traffic management provider based in New York City. Launched in 2013, NSONE has quickly won major customers including Imgur, Collective Media, OneLogin, and many more with its unique blend of high performance DNS and granular but easy to use traffic management.

Alex, how do you figure that anycasting is expensive? I'm not sure I follow your rationale on that one. It looks like you have built your business around something that is mostly a black art today, and that even some of the best CDNs and content owners do not do well. So kudos to you there.

Hi Michael. Thanks for the comment.

I say that anycasting is expensive because you need to find a provider that lets you make BGP announcements (not very common and not cheap), you need your own IP space, and you need a networking expert to manage all of this for you.

To many businesses this is prohibitive from a cost and manpower standpoint.